AI Deepfakes: Understanding the Legal Implications.

Imagine this: by some estimates, roughly 90% of deepfake content online is non-consensual pornography.

This is a huge jump in AI misuse.

It raises serious legal problems that go well beyond privacy.

The fast growth of AI-generated media, like AI deepfakes, is a big challenge for laws.

We need to act fast to deal with these legal issues.

“AI deepfakes” are fake media, made with AI, that look and sound like a real person.

This tech shows how smart AI can be.

But it also brings up big legal problems.

These problems touch on defamation, intellectual property rights, and privacy.

With more deepfake use, we really need to update our laws to protect everyone.

Key Takeaways

- AI deepfakes involve replacing a person’s likeness and voice using advanced AI.

- 90% of deepfake content online in 2023 was non-consensual pornography.

- The rise in deepfake technology demands new legal frameworks.

- Key legal challenges include defamation, privacy violations, and intellectual property rights.

- There’s an urgent need for regulations to combat the misuse of AI-generated media.

Introduction to AI Deepfakes

AI deepfakes are a new technology that changes how we see and interact with media.

They use advanced artificial intelligence to make fake content.

This technology has grown fast, affecting our society in big ways.

Definition of AI Deepfakes

So, what are AI deepfakes? They are fake media, such as photos, videos, or audio, that look and sound real.

They use machine learning to change someone’s image or voice.

This makes it hard to tell what’s real and what’s not, leading to trust issues and fake news.

Development and Evolution of Deepfake Technology

Deepfake technology has grown fast.

It started in research but now it’s everywhere.

At first, it was simple, but now it’s very realistic.

This fast growth means we need to understand its power and how it can be used wrongly.

Legal Challenges Posed by AI Deepfakes

AI deepfakes raise many legal issues because they can change and fake reality.

They touch on several legal areas, making it hard to find solutions.

New rules are needed to handle these problems.

Defamation Laws and Deepfakes

Defamation through deepfakes is a major concern.

These AI-made videos and images can show people saying or doing things they never said or did.

This can hurt someone’s reputation and career.

The law is slow to adapt to deepfake tech.

This makes it hard to get justice for those who have been defamed.

Privacy Violations and Deepfakes

Privacy issues with AI deepfakes are serious.

They often use someone’s image or voice without asking.

This can lead to big privacy problems.

As deepfakes get better, protecting privacy gets harder.

But it’s very important to keep people’s personal info safe.

Intellectual Property Rights Issues

AI deepfakes also raise concerns about intellectual property.

They can copy or change protected works without permission.

This is a big problem for creators.

Deepfakes are easy to make and share.

This makes it hard to protect original content.

New laws are needed to keep creators’ rights safe.

Impact on the Entertainment Industry

AI deepfakes have changed the entertainment world a lot.

They affect both celebrities and people watching movies and TV.

These fake images and voices challenge stars’ rights and spread false information.

Cases Involving Celebrities

Many famous cases show how deepfakes are changing Hollywood.

Stars like Tom Cruise and Scarlett Johansson have seen their images used without permission.

This harms their careers and personal images.

Potential for Misinformation

Deepfakes can make fake videos that look real.

This makes it easy to spread false information in the entertainment world.

It can confuse fans and journalists, making it hard to know what’s real.

Legal Implications of AI Deepfakes

The rise of deepfake technology has led to a need for new laws worldwide.

Countries are working hard to stop the harm caused by AI deepfakes.

That harm includes privacy violations and a loss of trust in digital media.

Regulatory Frameworks Worldwide

Many countries are making new rules to control deepfakes.

In the United States, some states have laws against using deepfakes in politics and explicit content.

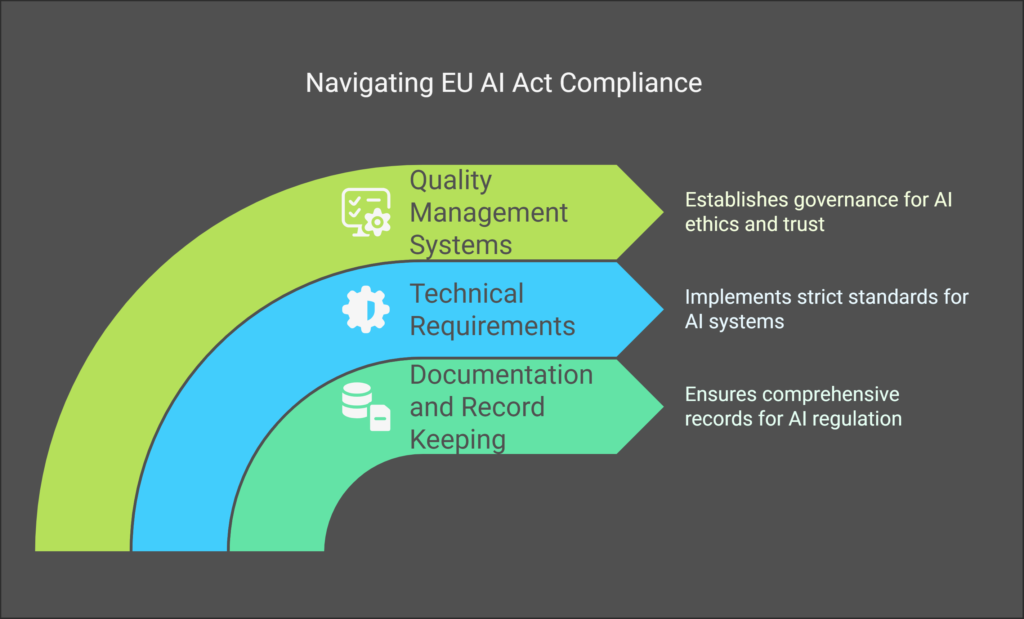

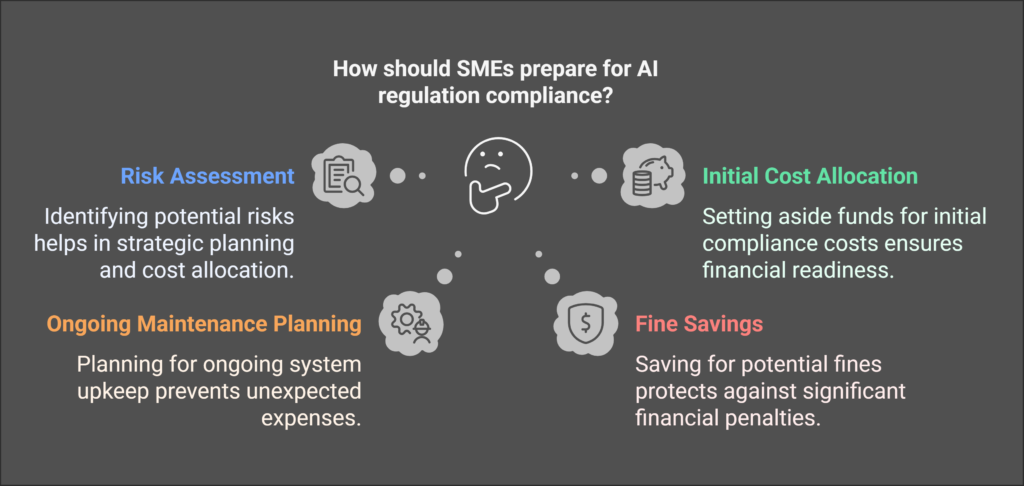

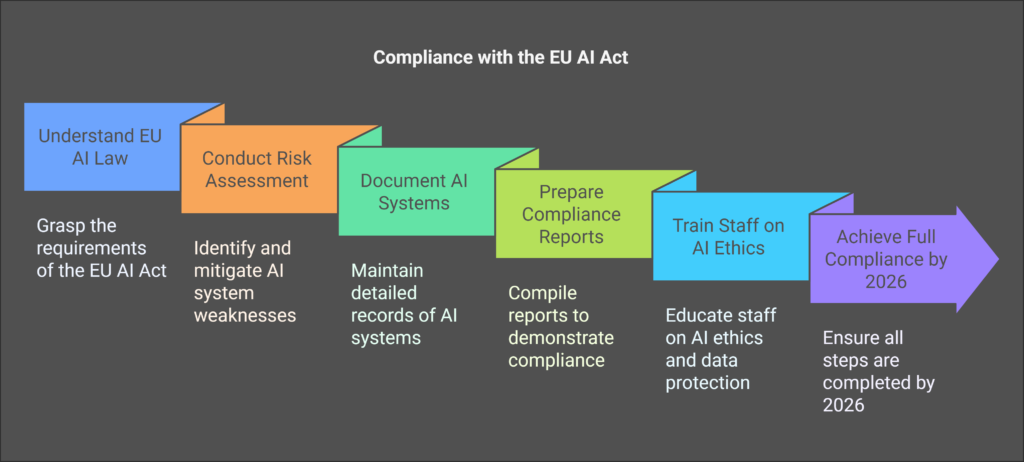

The European Union’s AI Act, adopted in 2024, sets transparency rules for deepfakes, requiring that AI-generated or manipulated content be disclosed as such.

China and Australia are also making their own rules to stop deepfakes from being misused.

| Country/Region | Regulatory Initiative | Focus Areas |
| --- | --- | --- |
| United States | State Laws | Political Campaigns, Explicit Content |
| European Union | AI Act | General AI Governance, Deepfake Guidelines |
| China | Countrywide Regulations | Content Authenticity, Media Integrity |
| Australia | National Policies | Digital Identity, Media Usage |

Proposed Legislation and Policies

Many new laws are being made to deal with deepfakes.

These laws aim to stop bad uses of deepfakes and protect people’s digital identities.

The United Kingdom’s Online Safety Act 2023, introduced as the Online Safety Bill, is an example, aiming to keep harmful deepfakes off the internet.

Japan is also thinking about strong rules for AI-generated content.

These efforts show that countries worldwide agree on the need for action against deepfakes.

Creating strong laws and standards is key to fighting deepfakes.

It helps protect people and ensure justice for those affected.

Ethical Guidelines Surrounding Deepfake Technology

Deepfake technology is advancing fast, raising many ethical concerns.

One big issue is consent.

People often find their images used without their permission, which raises questions about their rights.

Deepfakes can also cause harm, like blackmail and spreading false information, affecting many.

Another concern is the trustworthiness of digital media.

Deepfakes make it hard to know what’s real and what’s not.

This can hurt public trust, democracy, and how we share information.

To tackle these problems, we need clear rules for using AI deepfakes.

These rules should cover getting consent, checking media facts, and holding people accountable for misuse.

By setting global standards, we can manage the ethics of deepfake tech and reduce its harm.

| Ethical Concerns | Considerations | Guidelines for AI Deepfake Use |
| --- | --- | --- |
| Consent | Ensure individuals have given explicit permission | Implement stringent consent protocols |
| Potential for Harm | Address the risks of blackmail, defamation, and misinformation | Establish accountability for misuse |
| Authenticity | Maintain the integrity of media content | Validate media integrity rigorously |
| Public Trust | Preserve trust in digital information | Set global ethical standards |

AI Deepfakes in the Legal Field

AI technology is growing fast, and deepfakes are becoming a big worry in law.

These fake videos and sounds can look very real.

They can make people question the truth of important evidence in court.

This is forcing legal systems to rethink how they check and trust evidence.

Impact on Legal Proceedings

Deepfakes in court are a big problem for lawyers.

They can be used to trick people or change what witnesses say.

This makes the legal process harder.

Prosecutors and defense lawyers need to learn more about spotting and checking deepfakes.

Knowing about deepfakes helps keep trials fair.

Use of Deepfakes as Evidence

Handling evidence that may be a deepfake is very tricky for courts.

They can look so real that they might fool anyone.

This could ruin fair trials.

Courts need to find ways to check this evidence well.

They need new tools to spot fake content.

Keeping up with tech is key to keeping justice fair.

Protecting Intellectual Property and Publicity Rights

Deepfake technology has become a big worry for protecting rights, mainly because people’s images and creative work are being used without permission.

This has led to more cases of intellectual property infringement.

High-profile lawsuits have shown how urgent it is to have strong laws.

These laws need to protect people from misuse of their images and work.

Case Studies and Legal Precedents

Celebrities like Scarlett Johansson have faced issues with their images being used without consent.

These cases are important because they show the need for new laws.

They help us understand how courts are trying to protect people’s rights.

Future Legal Trends

Experts think we’ll see better laws to fight deepfake threats in the future.

Lawmakers are working on new rules to handle digital manipulation and unauthorized content.

By looking at current cases, we can see how laws are changing.

It’s important to create strong laws fast because deepfake tech is getting better quickly.

We need to keep working on protecting rights against deepfakes.

New laws will aim to keep up with tech while protecting our rights online.

| Case | Involvement | Outcome |
| --- | --- | --- |
| Scarlett Johansson | Unauthorized deepfake videos | Raised awareness, push for new laws |
| Tom Cruise | Deepfake impersonation | Increased scrutiny on privacy laws |
| Keanu Reeves | Misappropriated likeness | Legal action and advocacy for rights |

Combating Deepfake Technology

Fighting deepfake technology needs a mix of new tech and strict laws.

We must combine advanced detection tools with firm legal rules to tackle deepfakes.

This is key to stopping the misuse of deepfake tech.

Technological Solutions

New tech is leading the fight against deepfakes.

Tools like deepfake detection algorithms and blockchain help verify digital content.
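To make the verification idea concrete, here is a minimal sketch of how a blockchain-style record could help confirm that a media file has not been altered since it was registered. It is an illustration under stated assumptions, not how any particular product works: `ProvenanceLedger`, `Block`, and `fingerprint` are hypothetical names, and a simple in-memory hash chain stands in for a real distributed ledger. Note that this only checks whether a file matches a registered original; it does not detect whether the original itself was genuine.

```python
import hashlib
import json
from dataclasses import dataclass


def fingerprint(media_bytes: bytes) -> str:
    """Return the SHA-256 hex digest of a media file's raw bytes."""
    return hashlib.sha256(media_bytes).hexdigest()


@dataclass(frozen=True)
class Block:
    index: int
    media_hash: str  # fingerprint of the registered media
    prev_hash: str   # hash of the previous block, linking the chain
    block_hash: str  # hash computed over this block's own fields


class ProvenanceLedger:
    """Hypothetical append-only ledger; a stand-in for a real blockchain."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first block

    def __init__(self) -> None:
        self.blocks: list[Block] = []

    @staticmethod
    def _seal(index: int, media_hash: str, prev_hash: str) -> str:
        # Hash the block's fields so any later change to them is detectable.
        payload = json.dumps([index, media_hash, prev_hash]).encode()
        return hashlib.sha256(payload).hexdigest()

    def register(self, media_bytes: bytes) -> Block:
        """Append a record of the media's fingerprint to the chain."""
        prev = self.blocks[-1].block_hash if self.blocks else self.GENESIS
        index = len(self.blocks)
        media_hash = fingerprint(media_bytes)
        block = Block(index, media_hash, prev, self._seal(index, media_hash, prev))
        self.blocks.append(block)
        return block

    def is_registered(self, media_bytes: bytes) -> bool:
        """True only if these exact bytes were registered earlier."""
        target = fingerprint(media_bytes)
        return any(b.media_hash == target for b in self.blocks)

    def chain_is_intact(self) -> bool:
        """Recompute every link; False if any record was altered."""
        prev = self.GENESIS
        for i, b in enumerate(self.blocks):
            if b.index != i or b.prev_hash != prev:
                return False
            if b.block_hash != self._seal(b.index, b.media_hash, b.prev_hash):
                return False
            prev = b.block_hash
        return True


ledger = ProvenanceLedger()
original = b"original interview footage"  # placeholder for real file bytes
ledger.register(original)
ledger.register(b"press photo")

print(ledger.is_registered(original))                       # True
print(ledger.is_registered(b"original interview f00tage"))  # False
print(ledger.chain_is_intact())                             # True
```

Because each record includes the hash of the one before it, quietly rewriting an old entry breaks every later link, which is the property that makes this kind of ledger useful for provenance.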

AI models also help tell real from fake media.

These tools keep getting better, thanks to ongoing updates.

Big tech companies like Google and Microsoft, along with places like MIT, are key players in this fight.

Legal Measures and Enforcement

From a legal standpoint, we need to enforce laws better and create new ones to keep up with deepfake tech.

This is a job for both national and international efforts.

The U.S., the EU, and other jurisdictions are working together to fight deepfakes.

Legal steps include harsh penalties for those who misuse deepfakes.

We also need ways to quickly check if content is real or fake.

And we need laws that can change as tech evolves.

| Aspect | Technological Solutions | Legal Measures |
| --- | --- | --- |
| Tools | Deepfake detection algorithms, Blockchain, AI models | Enforcement of existing laws, New legislation |
| Key Players | Google, Microsoft, MIT | U.S. authorities, EU, International organizations |
| Approach | Proactive, continuous updates | Reactive and preventative, international cooperation |

Conclusion

AI deepfakes pose big challenges that need a strong and changing legal response.

As this tech grows, so must our laws to fight deepfake risks.

Deepfakes touch many legal areas, like defamation and privacy, making strong rules key.

The future of AI deepfakes in law depends on how well laws can keep up.

Governments need to make clear, forward-looking laws.

This way, deepfakes won’t harm our rights or trust in society.

With the right laws and ethics, we can keep up with tech while protecting our rights.

To tackle deepfake issues, we need both tech and law to work together.

We must keep improving detection tech and have strict laws and enforcement.

Working together, we can protect our digital world and keep laws up to date with tech.

FAQ

What are AI deepfakes?

How has deepfake technology evolved?

What legal challenges do deepfakes pose?

How have deepfakes impacted the entertainment industry?

What regulatory frameworks are in place to address deepfake challenges?

What are the ethical guidelines surrounding deepfake technology?

How do deepfakes impact the legal field, particularly concerning legal proceedings?

How are intellectual property and publicity rights protected against deepfakes?

What technological solutions exist to combat deepfakes?

What legal measures can be enforced to combat the misuse of deepfakes?

What is a deepfake?

A deepfake is a type of synthetic media where a person’s likeness is digitally manipulated using artificial intelligence and deep learning technologies.

The term “deepfake” combines “deep learning” and “fake,” highlighting how these convincing forgeries are created using sophisticated AI algorithms, particularly generative adversarial networks.

Deepfake technology can swap faces in videos, manipulate speech, or create entirely fabricated scenarios that appear authentic.

Since their emergence, deepfakes have evolved from crude manipulations to remarkably realistic fake content that can be difficult to distinguish from genuine online content, raising significant legal and ethical implications for society.

How do deepfakes work?

Deepfakes function through advanced AI technologies known as generative adversarial networks (GANs).

This deepfake technology involves two competing neural networks: one that creates the fake images or videos, and another that tries to detect the forgery.

Through this competitive process, the system continuously improves at creating more convincing fakes.
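The competition between the two networks is commonly written as a minimax objective. The formulation below is the standard one from the GAN literature, offered here as background rather than as a description of any specific deepfake tool. The symbols are notation introduced for illustration: $G$ is the generator, $D$ is the detector (usually called the discriminator), $p_{\text{data}}$ is the distribution of real images, and $p_z$ is the random noise the generator starts from.

$$
\min_{G} \max_{D} \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_{z}}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator tries to make $V$ large by scoring real samples near 1 and generated samples near 0, while the generator tries to make $V$ small by producing samples the discriminator scores as real.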

The technology requires substantial training data—typically numerous images or video frames of the target person—to learn facial expressions, movements, and speech patterns.

Modern deepfake systems can now generate highly convincing videos from minimal source material, which puts the creation and distribution of deepfakes within reach of people who have no specialized technical expertise.

What are the main legal issues surrounding deepfakes?

The legal issues surrounding deepfakes are complex and evolving.

Current legal challenges include addressing defamation when someone’s likeness is used without consent, particularly in sexually explicit deepfakes.

Privacy laws are often challenged as deepfakes frequently involve the unauthorized use of personal data.

Intellectual property concerns arise with the manipulation of copyrighted images or likenesses.