GDPR Compliance for AI-Powered Tools

As Romanian businesses adopt more AI, understanding how GDPR applies to AI tools is essential.

Did you know AI can make compliance work 50 times faster than old methods?

This shows how AI can change the game in data privacy rules.

The General Data Protection Regulation (GDPR) changed how we handle personal data in 2018.

AI’s rapid adoption brings new business opportunities, but also new challenges in complying with GDPR and AI rules.

In Romania, getting good at GDPR for AI tools is more than just avoiding trouble.

It’s about winning customer trust and using privacy-friendly AI to stay ahead.

Let’s see how you can handle these rules and use AI’s power.

Key Takeaways

- AI can speed up compliance efforts by 50 times compared to manual methods;

- GDPR outlines 6 legal grounds for processing personal data;

- AI systems require large volumes of data, necessitating careful dataset compilation;

- Data retention periods must be proportional and not indefinite;

- Continuous learning AI systems raise questions about data protection;

- Transparency in AI processing is key for GDPR compliance;

- Organizations can save time by using AI for regulatory research and compliance mapping.

Understanding GDPR and Its Impact on AI Technologies

The General Data Protection Regulation (GDPR) sets strict guidelines for data handling in the European Union.

It became applicable on May 25, 2018.

It shapes how organizations collect, store, and process personal information.

This framework has significant implications for AI technologies, which often rely on vast amounts of data.

Definition and Scope of GDPR

GDPR aims to protect individual privacy rights and ensure responsible data practices.

It applies to any organization processing EU residents’ personal data, regardless of the company’s location.

The regulation grants individuals rights such as data access, erasure, and informed consent.

AI Processing Under GDPR Framework

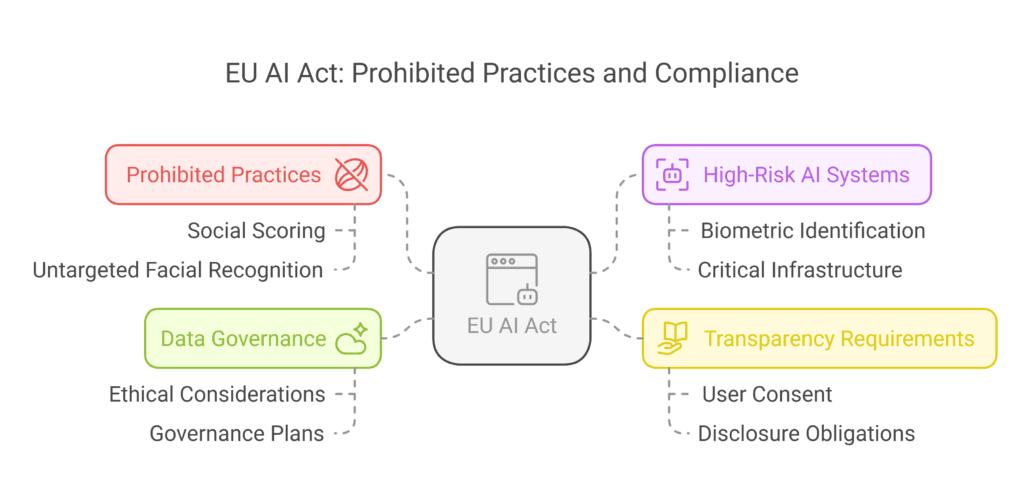

AI systems face unique challenges under GDPR.

The regulation’s emphasis on data minimization conflicts with AI’s need for large datasets.

About 70% of AI projects struggle to comply with this principle.

GDPR also requires transparency in automated decision-making, impacting AI applications in finance, healthcare, and hiring.

Key GDPR Principles Affecting AI Systems

Several GDPR principles directly influence AI development and deployment:

- Data minimization and purpose limitation;

- Transparency and accountability;

- Secure data processing;

- Algorithmic bias mitigation.

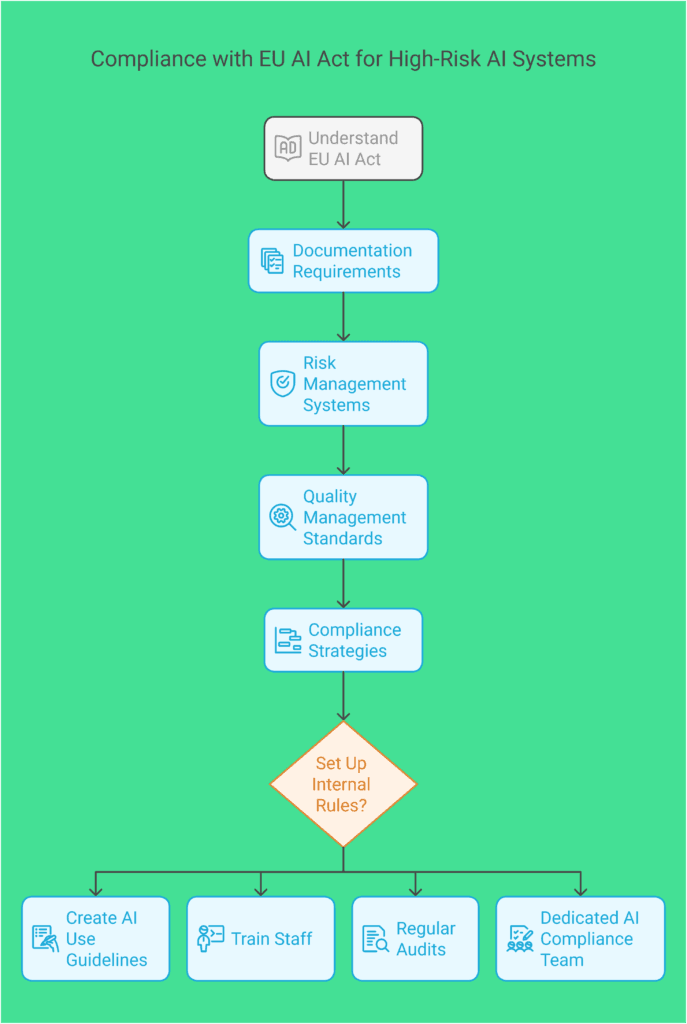

Organizations must implement robust AI governance frameworks to ensure compliance.

This includes adopting data anonymization techniques and prioritizing AI transparency and accountability.

By focusing on these areas, businesses can navigate the complex landscape of GDPR and AI integration effectively.

| GDPR Principle | Impact on AI | Compliance Strategy |

|---|---|---|

| Data Minimization | Limits dataset size | Implement data anonymization techniques |

| Transparency | Requires explainable AI | Develop AI transparency measures |

| Consent | Affects data collection | Design clear consent mechanisms |

| Security | Mandates data protection | Employ secure data processing methods |

GDPR Compliance for AI-Powered Tools

AI tools must follow GDPR when they process the personal data of individuals in the EU or are operated by organizations established in the EU.

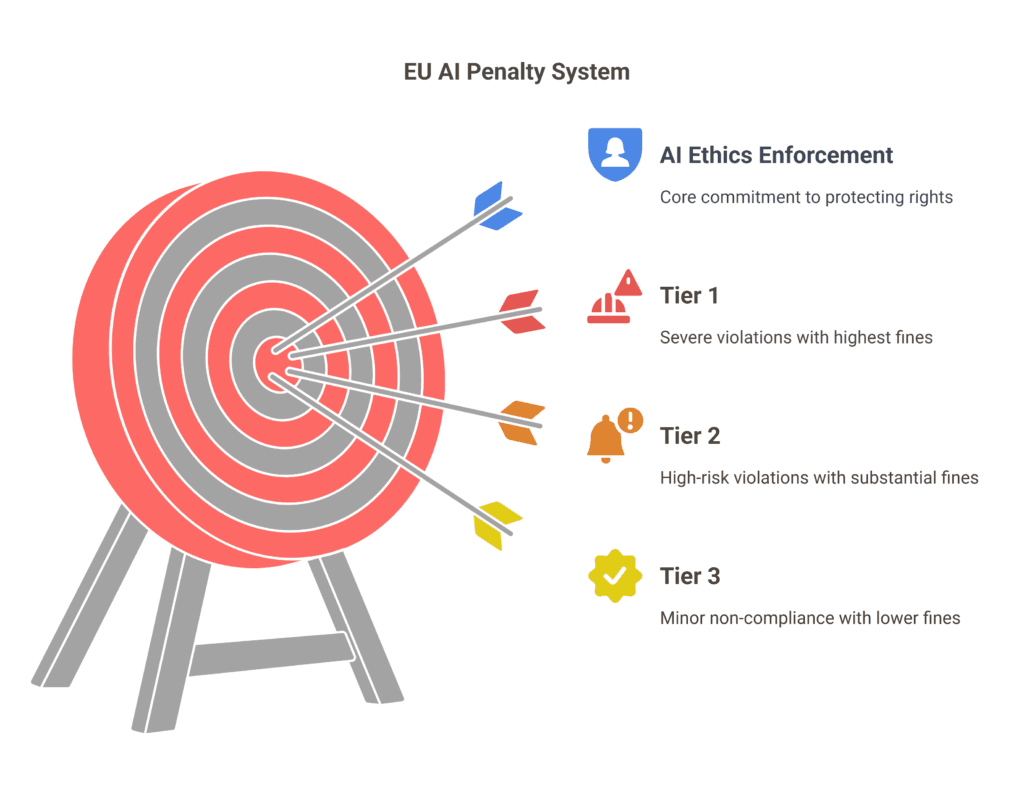

Failing to comply can lead to large fines: up to €10 million or 2% of worldwide annual turnover for some infringements, and up to €20 million or 4% for the most serious ones.

Businesses in Romania need to grasp the details of GDPR for their AI systems.

Starting with data minimization is key to responsible AI. GDPR requires that you use only the data needed for a specific, stated purpose.

AI systems should use methods like anonymization and pseudonymization to keep data safe while gaining insights.
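As an illustration of pseudonymization, here is a minimal sketch that replaces direct identifiers with a keyed hash (HMAC-SHA256) before records reach an AI pipeline. The function names, the field names, and the key handling are assumptions made for this example, not a prescribed method. Keep in mind that pseudonymized data still counts as personal data under GDPR, because whoever holds the key can link the records back to a person.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Return a stable keyed hash (HMAC-SHA256) of a direct identifier."""
    # Normalizing first means "Ana@Example.com " and "ana@example.com" match.
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()


def pseudonymize_record(record: dict, identifier_fields: set, secret_key: bytes) -> dict:
    """Replace only the listed identifier fields; leave other fields untouched."""
    return {
        field: pseudonymize(str(value), secret_key) if field in identifier_fields else value
        for field, value in record.items()
    }


# Hypothetical usage: the key would normally come from a secrets manager.
key = b"example-key-store-this-separately"
customer = {"email": "ana@example.com", "plan": "premium", "monthly_usage": 42}
print(pseudonymize_record(customer, {"email"}, key))
```

Storing the key separately from the pseudonymized dataset is what keeps re-identification under your control.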

Algorithmic fairness is critical in AI decision-making.

AI systems must let people see their data, understand how decisions were made, and have the right to be forgotten.

This openness is essential for trust and meeting GDPR standards.

Data protection impact assessments are needed for risky AI activities.

These assessments help spot and fix privacy risks.

Companies must do regular checks and use strong security to avoid data leaks.

| GDPR Requirement | AI Implementation |

|---|---|

| Explicit Consent | Clear, specific consent for AI data processing |

| Data Minimization | Use only necessary data for AI models |

| Transparency | Explainable AI decision-making processes |

| Right to Erasure | Ability to remove personal data from AI systems |

To uphold artificial intelligence ethics, companies must train staff on privacy, bias, and ethics.

Using access controls and a privacy-first design are key to integrating data protection into AI tools.

Data Privacy Requirements for AI Systems

AI systems must follow strict data privacy rules under GDPR.

These rules protect personal info and let AI tech grow.

It’s key for Romanian businesses using AI tools to know these rules.

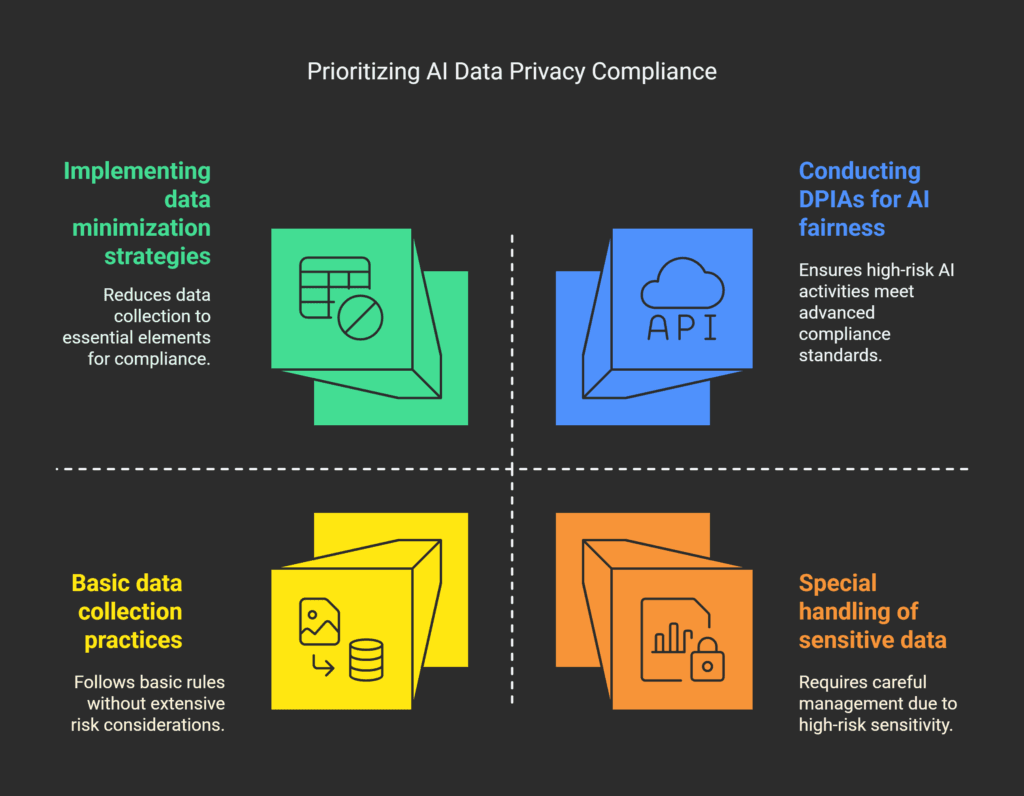

Data Minimization and Purpose Limitation

GDPR says organizations should only collect data needed for specific tasks.

This rule, data minimization, is key for AI systems that need lots of data.

You must figure out the least amount of personal data your AI tools need.

Purpose limitation means data can only be used for its original purpose.

Your AI rules should make sure data isn’t misused.

This makes AI more trustworthy and ethical.
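One way to put data minimization and purpose limitation into practice is an allow-list of fields per documented purpose. The sketch below is only an assumption about how this could look: the purpose names, field names, and the `minimize` helper are hypothetical.

```python
# Hypothetical mapping from each documented purpose to the fields it may use.
ALLOWED_FIELDS = {
    "churn_model_training": {"account_age_days", "plan", "monthly_usage"},
    "support_chatbot": {"ticket_text", "language"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Keep only the fields approved for the given purpose."""
    if purpose not in ALLOWED_FIELDS:
        # Purpose limitation: refuse processing for undocumented purposes.
        raise ValueError(f"No documented purpose named {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


raw = {"name": "Ana Pop", "email": "ana@example.com", "plan": "premium", "monthly_usage": 42}
print(minimize(raw, "churn_model_training"))
```

Here the name and email never reach the training set, and a request for an unlisted purpose fails loudly instead of silently reusing the data.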

Special Categories of Personal Data

AI systems handling sensitive data, like health info or biometrics, need extra care.

You must have strong security and, in most cases, obtain explicit consent or rely on another legal condition that GDPR allows for these data types.

Data Protection Impact Assessments (DPIAs)

DPIAs are needed for high-risk AI activities.

They help spot and fix data protection risks.

Your DPIA should check on AI fairness and GDPR compliance.

Doing DPIAs shows you’re serious about safe AI use.

It protects people’s rights and makes sure your AI meets legal and ethical standards.

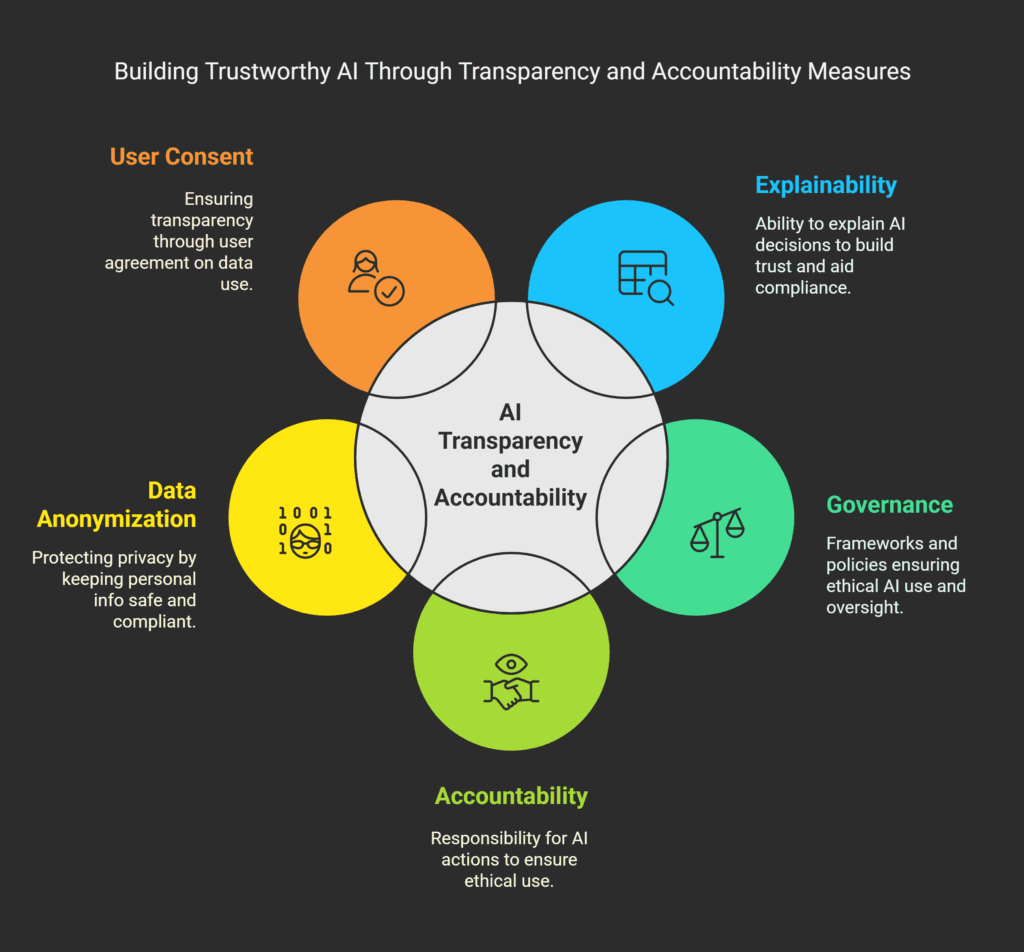

AI Transparency and Accountability Measures

AI transparency is key to trustworthy AI systems.

It includes explainability, governance, and accountability.

As AI models grow more complex, keeping things transparent gets harder.

Data anonymization is vital for privacy in AI.

It keeps personal info safe while AI works well.

This helps Romanian businesses meet GDPR rules.

User consent is essential for AI transparency.

Companies must tell users how data is used and get their okay.

This builds trust and follows data protection laws.
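The consent step described above can be backed by a simple ledger that records what each person agreed to and lets them withdraw later. This is a minimal in-memory sketch; the `ConsentLedger` class, its method names, and the `notice_version` field are assumptions for illustration, and a real system would persist these records.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ConsentRecord:
    subject_id: str
    purpose: str
    notice_version: str  # which privacy notice the person actually saw
    given_at: datetime
    withdrawn_at: Optional[datetime] = None


class ConsentLedger:
    """Tracks consent per person and per purpose (hypothetical helper)."""

    def __init__(self):
        self._records = {}

    def give(self, subject_id, purpose, notice_version, when=None):
        when = when or datetime.now(timezone.utc)
        self._records[(subject_id, purpose)] = ConsentRecord(
            subject_id, purpose, notice_version, when
        )

    def withdraw(self, subject_id, purpose, when=None):
        record = self._records.get((subject_id, purpose))
        if record is not None and record.withdrawn_at is None:
            record.withdrawn_at = when or datetime.now(timezone.utc)

    def has_consent(self, subject_id, purpose) -> bool:
        record = self._records.get((subject_id, purpose))
        return record is not None and record.withdrawn_at is None


ledger = ConsentLedger()
ledger.give("user-1", "model_training", notice_version="2024-01")
print(ledger.has_consent("user-1", "model_training"))
ledger.withdraw("user-1", "model_training")
print(ledger.has_consent("user-1", "model_training"))
```

Keying consent by purpose means agreement to one use of the data is never treated as agreement to another.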

Companies can use many tools for AI transparency:

- Explainability tools;

- Fairness toolkits;

- Auditing frameworks;

- Data provenance tools.

These tools help with different parts of AI transparency.

They help businesses make AI systems more accountable.

| Transparency Requirement | Description | Importance |

|---|---|---|

| Explainability | Ability to explain AI decisions | Builds trust, aids compliance |

| Interpretability | Understanding how AI works | Enhances user confidence |

| Accountability | Responsibility for AI actions | Ensures ethical use of AI |

By using these steps, Romanian businesses can make trustworthy AI.

They will follow GDPR and keep user trust and privacy safe.

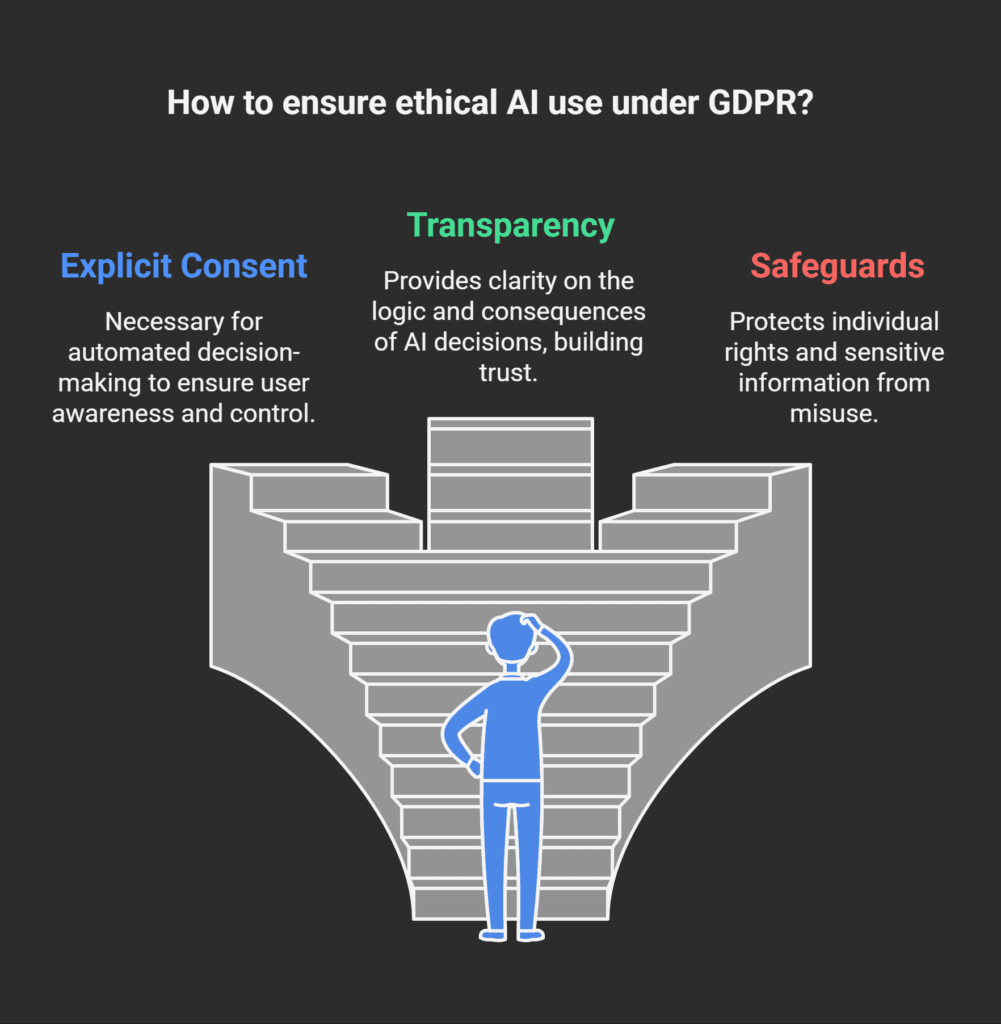

Automated Decision-Making and Profiling Rights

AI tools have made automated decision-making and profiling big issues in data protection.

GDPR has strict rules for these, focusing on ethics and transparent AI systems.

Individual Rights Under GDPR

GDPR gives you rights over automated processing of your data.

You can ask to see your data, stop its use, or fix or delete it.

AI systems must respect these rights, especially where sensitive data is involved.

Automated Processing Restrictions

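Decisions based solely on automated processing that have legal or similarly significant effects on you are allowed only with your explicit consent, when necessary for a contract, or when authorized by law.

Companies must tell you about the logic involved and the possible consequences.

This makes AI more trustworthy and keeps data protection central.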

| Requirement | Description |

|---|---|

| Legal Basis | Explicit consent, contractual necessity, or legal authorization |

| Transparency | Inform about logic and consequences |

| Safeguards | Implement measures to protect rights |

| DPIAs | Regular assessments to mitigate risks |

Right to Human Intervention

GDPR gives you the right to human review in automated decisions.

This means AI can’t decide everything important in your life.

Companies must let you share your views and challenge automated decisions.
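The right to human intervention can be reflected directly in how a system finalizes decisions. The sketch below routes any decision that has a significant effect, or that the person has contested, to a human reviewer. The `Decision` class, the `significant_effect` flag, and the status strings are assumptions for this example; how you classify an effect as significant is a legal judgment the code does not make for you.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    subject_id: str
    outcome: str                # what the model proposed, e.g. "reject"
    significant_effect: bool    # legal or similarly significant effect
    contested: bool = False     # the person has challenged the outcome


def needs_human_review(decision: Decision) -> bool:
    return decision.significant_effect or decision.contested


def finalize(decision: Decision, reviewer_outcome: Optional[str] = None) -> str:
    """Return the final outcome, holding it until a human has reviewed it if required."""
    if needs_human_review(decision):
        if reviewer_outcome is None:
            return "pending_human_review"
        return reviewer_outcome  # the human reviewer has the final say
    return decision.outcome


loan = Decision("user-7", outcome="reject", significant_effect=True)
print(finalize(loan))                              # waits for a reviewer
print(finalize(loan, reviewer_outcome="approve"))  # reviewer overrides the model
```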

Following these rules, Romanian businesses can use AI responsibly.

They uphold ethical standards and protect individual rights.

The aim is to make AI that’s efficient yet respects human values and privacy.

Data Security and Risk Management for AI Tools

AI tools introduce new security and risk challenges.

In Romania, companies must focus on secure data handling and managing AI risks to follow GDPR.

They need to use strong technical and organizational controls.

Technical Security Measures

Companies should use encryption, access controls, and security tests.

These steps protect AI system data from unauthorized access and breaches.

Organizational Security Controls

Good data governance is key.

This means having clear policies, procedures, and training for employees.

A solid framework helps keep compliance and lowers AI risks.

Breach Notification Requirements

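GDPR requires that personal data breaches be reported to the supervisory authority without undue delay and, where feasible, within 72 hours of becoming aware of them. Companies must have systems for fast detection and notification.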

This is very important for AI systems that handle lots of personal data.
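To make the notification timing concrete, the sketch below computes the reporting deadline from the moment a breach is detected, using the 72-hour window that GDPR sets for notifying the supervisory authority where feasible. The function names are assumptions for this example, and the clock starts when the organization becomes aware of the breach, not when the breach occurred.

```python
from datetime import datetime, timedelta, timezone

# 72-hour window for notifying the supervisory authority (GDPR Article 33).
NOTIFICATION_WINDOW = timedelta(hours=72)


def notification_deadline(became_aware_at: datetime) -> datetime:
    """Latest time by which the authority should be notified."""
    return became_aware_at + NOTIFICATION_WINDOW


def hours_remaining(became_aware_at: datetime, now: datetime) -> float:
    """Hours left before the deadline; negative if it has already passed."""
    return (notification_deadline(became_aware_at) - now).total_seconds() / 3600


aware = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
print(notification_deadline(aware))
print(hours_remaining(aware, aware + timedelta(hours=24)))
```

Using timezone-aware timestamps avoids ambiguity when the detection system and the compliance team sit in different time zones.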

| Risk Management Aspect | Importance |

|---|---|

| AI Accountability | 75% of CROs see AI as a reputational risk |

| Consent Management | 70% of consumers concerned about data use |

| Data Governance | 2.5x more likely to achieve compliance |

By focusing on these areas, Romanian businesses can improve their GDPR compliance for AI tools.

Proper risk management not only avoids fines but also builds customer trust and protects your reputation.

Privacy by Design in AI Development

Privacy by Design is key in AI under GDPR.

It means building data protection into AI systems from the start.

This way, you protect data rights while using AI.

To start Privacy by Design, do data protection impact assessments.

These help spot and fix risks early. 92% of companies see the need for new risk handling with AI.

AI governance frameworks are vital for Privacy by Design.

They guide AI development and use, ensuring GDPR rules are followed.

They help with the 69% of companies facing legal issues with AI.

Algorithmic transparency is also important.

It makes AI decisions clear and fair. This builds trust and stops AI bias.

AI bias mitigation strategies are key too.

They make sure AI is fair and unbiased.

Regular checks and reviews can find and fix biases.

By using these steps, you can make AI systems that respect privacy.

This not only follows GDPR but also builds trust in your AI tools.

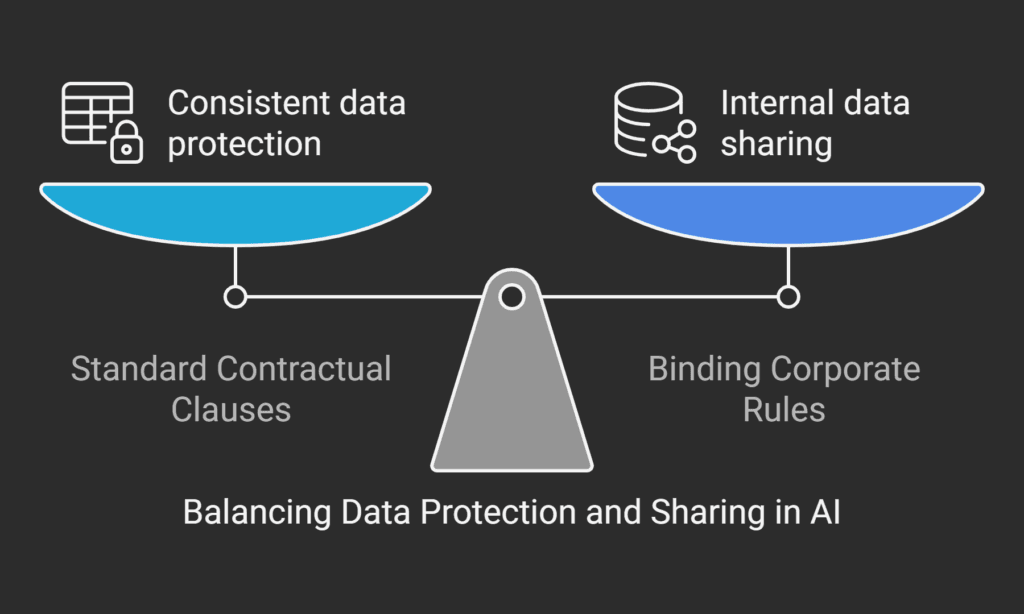

Cross-Border Data Transfers for AI Processing

AI tools often use data from different countries.

This creates legal challenges under GDPR.

Romanian businesses using AI must follow strict rules for moving data across borders.

International Data Transfer Mechanisms

GDPR restricts data transfers outside the EU to protect privacy.

Companies can use approved methods like Standard Contractual Clauses (SCCs) or Binding Corporate Rules (BCRs).

These ensure data stays safe during transfers.

Proper use of these tools is key for ethical AI governance.

Standard Contractual Clauses

SCCs are pre-approved contracts that set rules for data transfers.

They’re a popular choice for Romanian firms working with non-EU partners.

SCCs spell out data protection duties and rights.

This helps maintain AI accountability measures across borders.

Adequacy Decisions

Through an adequacy decision, the European Commission recognizes that a non-EU country offers a level of data protection comparable to the EU’s.

This allows easier data flows.

For AI projects, working with adequate countries can simplify compliance.

It supports AI transparency and explainability by ensuring consistent rules.

Cross-border transfers pose unique challenges for AI systems.

Data anonymization and privacy-preserving machine learning techniques are vital.

They help protect personal data while allowing AI to learn from global datasets.

Romanian companies must balance innovation with strict GDPR compliance in their AI strategies.

| Transfer Mechanism | Key Feature | Benefit for AI Processing |

|---|---|---|

| Standard Contractual Clauses | Pre-approved legal agreements | Ensures consistent data protection across borders |

| Binding Corporate Rules | Internal company policies | Facilitates data sharing within multinational AI companies |

| Adequacy Decisions | EU-approved countries | Simplifies data transfers for AI training and deployment |

Documentation and Record-Keeping Requirements

GDPR compliance for AI tools requires detailed records.

You need to document data processing, impact assessments, and security steps.

This helps show you’re following the rules and improves data handling.

To manage AI risks well, keep detailed logs of AI system use.

Record data flows, why you’re processing it, and how long you keep it.

Also, track user consent and data access requests.

These steps are key for following privacy and AI rules.
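The record-keeping described above can start as a small structured log. The sketch below stores, for each dataset, why it is processed and how long it may be kept, then flags datasets whose retention period has run out. The `ProcessingRecord` fields and the example values are assumptions for illustration, not a complete record of processing activities.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ProcessingRecord:
    dataset: str
    purpose: str
    lawful_basis: str
    collected_on: date
    retention_days: int

    def expires_on(self) -> date:
        return self.collected_on + timedelta(days=self.retention_days)


def overdue(records, today: date) -> list:
    """Names of datasets kept longer than their documented retention period."""
    return [r.dataset for r in records if r.expires_on() < today]


records = [
    ProcessingRecord("chat_logs", "support_chatbot", "consent", date(2023, 1, 1), 365),
    ProcessingRecord("usage_stats", "churn_model_training", "legitimate interests", date(2024, 1, 1), 730),
]
print(overdue(records, today=date(2024, 6, 1)))
```

Running a check like this on a schedule turns the retention policy from a document into something that is actually enforced.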

Explainable AI is very important.

You must document how AI makes decisions to be clear.

This should include how you avoid bias, showing you use AI fairly and ethically.

- Data Protection Impact Assessments: Update before major changes;

- Processing Activities Records: Monitor continuously;

- Security Measure Documentation: Outline quarterly;

- User Consent Records: Update in real-time.

Not following GDPR can lead to big fines, up to €20 million or 4% of your worldwide annual turnover, whichever is higher.

Good documentation helps avoid these fines and makes your work smoother.

In fact, 31% of companies say they work better after keeping good records.
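To show how the fine ceiling mentioned above scales with company size, here is a small sketch of the arithmetic. GDPR sets two tiers: up to €20 million or 4% of worldwide annual turnover for the most serious infringements, and up to €10 million or 2% for others, taking the higher amount in each case. The function name and parameters are assumptions for this example, and the result is a legal maximum, not a prediction of an actual fine.

```python
def max_fine_eur(annual_turnover_eur: float, upper_tier: bool = True) -> float:
    """Upper limit of a GDPR fine for a company with the given worldwide turnover."""
    fixed_cap, percent = (20_000_000, 4) if upper_tier else (10_000_000, 2)
    # The ceiling is whichever is higher: the fixed amount or the turnover share.
    return max(fixed_cap, annual_turnover_eur * percent / 100)


print(max_fine_eur(100_000_000))     # 4% is 4 million, so the 20 million cap applies
print(max_fine_eur(1_000_000_000))   # 4% is 40 million, which exceeds 20 million
```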

Conclusion

GDPR compliance is key for Romanian businesses using AI.

Ethical AI principles are the base for responsible AI.

They make sure AI respects privacy while pushing innovation.

Regular checks on AI models and privacy risk assessments are vital.

They help spot weaknesses and keep AI in line with data protection rules.

Also, clear machine learning models build trust and show a commitment to ethical AI.

Data protection by design is a big part of GDPR for AI tools.

Adding privacy safeguards early on helps avoid risks and boosts competitiveness.

The AI-enabled e-commerce market is expected to grow to $16.8 billion by 2030.

This shows how important GDPR-compliant AI is.

| GDPR Compliance Element | AI Implementation |

|---|---|

| Data Minimization | AI algorithms identify essential data |

| Transparency | AI-generated plain language notices |

| Consent Management | AI-powered platforms automate processes |

| Risk Assessment | AI conducts efficient DPIAs |

By following these GDPR-compliant AI practices, Romanian businesses can innovate while protecting individual rights in the digital world.

Contact: office@theromanianlawyers.com

FAQ

Understanding GDPR for AI tools in Romania can be tough.

This FAQ tackles the main concerns about AI explainability and data protection.

We’ll look at how to make AI decisions clear while following responsible AI rules.

AI audits and monitoring are key for GDPR. Regular checks help ensure AI uses only needed data.

This follows the data minimization rule. Also, GDPR restricts decisions made solely by automated means when they have legal or similarly significant effects on people.

So, add human checks and explain AI choices clearly.

Being open about AI and data handling is essential for GDPR. You must tell people how their data is used by AI.

Think about doing Data Protection Impact Assessments (DPIAs) for risky AI projects.

These help spot and fix privacy risks, making sure your AI meets GDPR standards.

For help on GDPR for AI tools in Romania, email office@theromanianlawyers.com.

Keep up with the latest in AI explainability to stay compliant and gain customer trust.

What are the key GDPR principles that affect AI systems?

GDPR principles for AI systems include data minimization and purpose limitation.

These mean AI systems should only collect and use data needed for their purpose.

They should also keep data only as long as necessary.

How can Romanian businesses ensure algorithmic fairness in their AI systems?

Romanian businesses should use bias mitigation techniques and audit AI models regularly.

They should also use diverse training data and transparent machine learning models.

This helps ensure fairness in AI systems.

What is a Data Protection Impact Assessment (DPIA) and when is it required for AI systems?

A DPIA is a process to identify and minimize data protection risks in AI systems.

It’s needed when an AI system poses a high risk to individuals’ rights and freedoms.

This includes systems that make automated decisions or handle sensitive data on a large scale.

How can businesses implement privacy-preserving machine learning techniques?

Businesses can use data anonymization, differential privacy, federated learning, and secure multi-party computation.

These methods help protect individual privacy while allowing AI processing to comply with GDPR.
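As one concrete example of these techniques, the sketch below applies differential privacy to a simple counting query using the Laplace mechanism. The function names and the `epsilon` values are assumptions for illustration; choosing a privacy budget for a real system needs careful analysis that this example does not cover.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    # expovariate takes a rate, so rate 1/scale gives each draw a mean of `scale`.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon: float, rng=None) -> float:
    """Return a noisy count of the values matching `predicate`."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    # Adding or removing one person changes a count by at most 1 (sensitivity 1),
    # so the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


ages = [23, 35, 41, 52, 67, 29]
print(private_count(ages, lambda age: age >= 40, epsilon=0.5))
```

A smaller `epsilon` adds more noise and gives stronger privacy, while a larger one gives more accurate counts.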

What are the requirements for obtaining valid user consent for AI processing under GDPR?

To get valid consent for AI processing, businesses must ensure it’s freely given, specific, informed, and unambiguous.

Users must be clearly told how their data will be used in AI systems.

Consent should be given through a clear affirmative action.

How can Romanian businesses ensure AI transparency and accountability?

Romanian businesses can ensure AI transparency by using explainable AI and maintaining detailed documentation.

Regular audits of AI systems and clear communication to data subjects are also key.

This helps maintain accountability.

What are the restrictions on automated decision-making under GDPR?

GDPR limits automated decision-making that affects individuals legally or significantly.

Such processing is permitted only when it is based on explicit consent, is necessary for a contract, or is authorized by law.

Individuals have the right to human intervention and to contest decisions.

What security measures should be implemented to protect personal data processed by AI systems?

AI systems should have data encryption, access controls, and regular security testing.

Robust policies and procedures are also essential.

Businesses should protect against adversarial attacks and ensure training data integrity.

How can Privacy by Design be incorporated into AI development?

Privacy by Design should be considered from the start of AI system design.

This includes minimizing data collection and implementing strong security measures.

It also involves ensuring data accuracy and limiting retention.

Features that support individual rights are also important.

What are the implications of cross-border data transfers for AI processing under GDPR?

Cross-border data transfers for AI processing must follow GDPR rules.

This might involve using Standard Contractual Clauses or relying on an adequacy decision for the destination country.

Businesses must ensure that the data receives a level of protection in the recipient country comparable to the EU’s.

What documentation should Romanian businesses maintain for their AI systems to demonstrate GDPR compliance?

Romanian businesses should keep records of processing activities, Data Protection Impact Assessments, and security measures.

They should also document consent, data breaches, and AI governance frameworks.

This includes AI risk management, bias mitigation, and measures for transparency and accountability.