Evaluating the Cost of Non-Compliance with AI Laws in the EU

Is your business ready for the financial consequences of ignoring AI rules in the European Union?

The EU Artificial Intelligence Act brings a complex set of rules.

Failing to follow them could seriously hurt your business’s profits.

Understanding what non-compliance with AI laws in the EU can cost is therefore essential.

The rules place strict obligations on how AI is developed and used.

If you break them, you could face fines of up to €35 million or 7% of your worldwide annual turnover, whichever is higher.

Businesses in Romania, and companies anywhere in the world that operate in the EU market, must take these AI rules seriously.

Given the size of these financial risks, compliance is not only a legal obligation but also a smart business decision.

Key Takeaways

- Maximum fines can reach €35 million or 7% of global turnover, whichever is higher;

- Three-tiered penalty system based on violation severity;

- High-risk AI systems face stringent compliance requirements;

- Penalties designed to be effective, proportionate and dissuasive;

- Compliance costs estimated at 17% overhead on AI spending.

Understanding the EU AI Act’s Penalty Framework

With its AI Act, the European Union has created a detailed framework to tackle algorithmic bias and lapses in AI accountability.

As the share of organizations using AI grows, from 58% in 2019 to 72% in 2024, so does the need for strong rules.

Regulatory Authority Overview

The AI Act sets up a detailed system to handle AI transparency and oversight failures.

Key parts of this system include:

- Comprehensive risk assessment methodology;

- Proactive monitoring of AI system implementations;

- Stringent compliance requirements.

Key Stakeholders and Enforcement Bodies

Several bodies and actors share responsibility for making sure AI rules are followed across Europe. The main ones are:

| Stakeholder | Responsibility |
|---|---|
| European Commission | Overall regulatory supervision |
| National Authorities | Local implementation and enforcement |
| AI Providers | Compliance and risk mitigation |

Scope of Application

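The EU AI Act applies to a wide range of actors in the AI value chain, including:

- Providers placing AI systems on the EU market, wherever they are established;

- Deployers of AI systems located in the EU;

- Importers and distributors;

- Product manufacturers using AI technologies.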

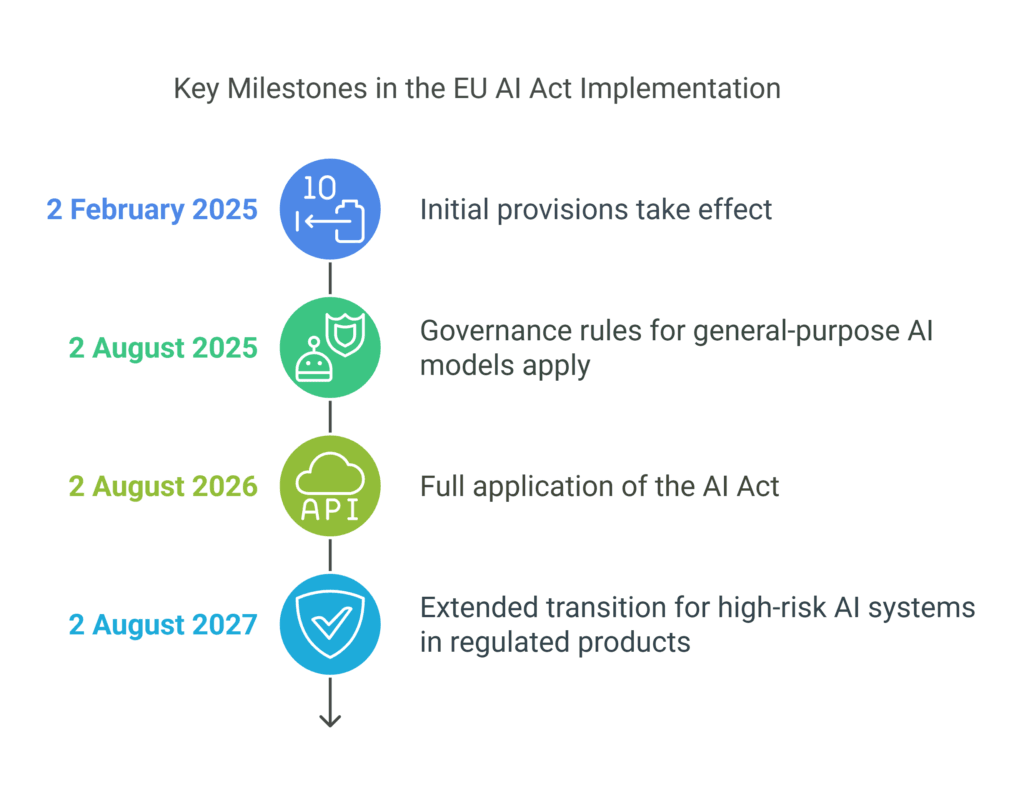

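The Act entered into force on 1 August 2024. Its bans on prohibited practices apply from 2 February 2025, and most of its remaining provisions apply from 2 August 2026.

Companies should use this transition period to prepare for the new obligations and avoid fines.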

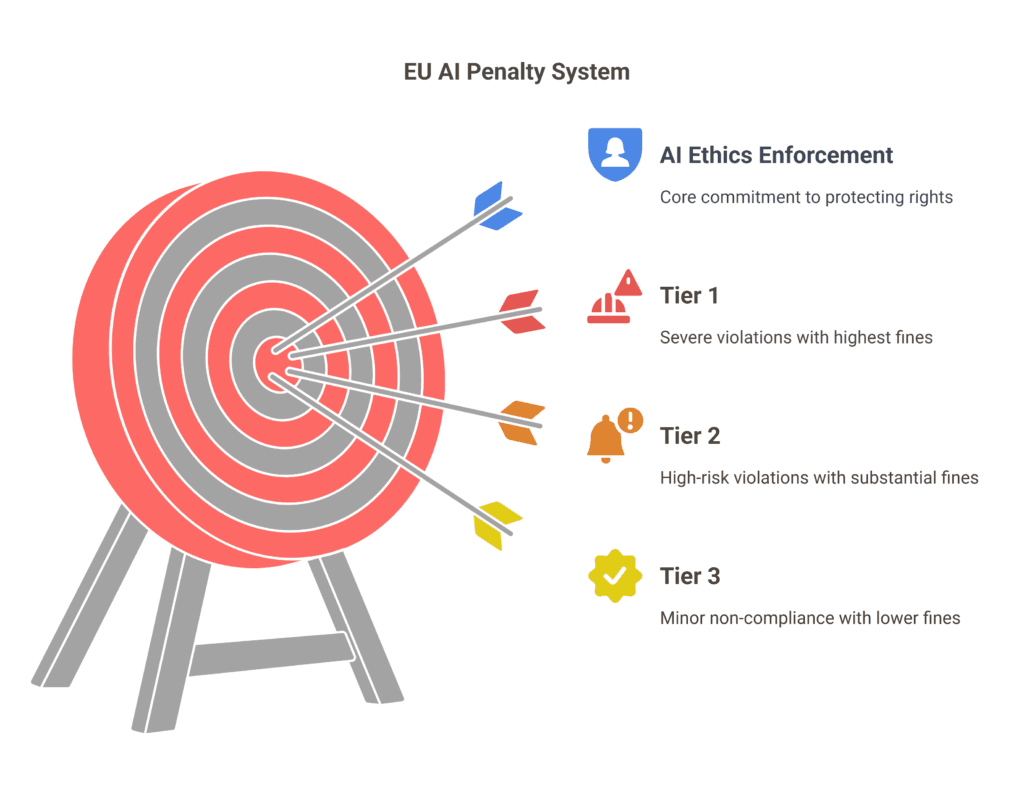

The Three-Tier Penalty System for AI Violations

Article 99 of the EU AI Act sets out a three-tier penalty system for AI ethics and accountability.

This system ensures that penalties match the severity of violations.

It is a core part of the European AI governance framework.

The penalty tiers are designed to handle different levels of non-compliance:

- Tier 1 (Prohibited AI Practices): Fines up to €35 million or 7% of global turnover;

- Tier 2 (Other Obligations, including High-Risk AI Requirements): Fines up to €15 million or 3% of global turnover;

- Tier 3 (Incorrect or Misleading Information to Authorities): Fines up to €7.5 million or 1% of global turnover.
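Under Article 99 of the Act, the ceiling for each tier is the higher of a fixed amount and a percentage of the company’s worldwide annual turnover for the preceding financial year. A minimal Python sketch of that arithmetic follows, assuming the final figures in Article 99(3) to (5) (€35 million/7%, €15 million/3%, €7.5 million/1%). The tier keys and the `max_fine` function are hypothetical names, and the result is only the statutory maximum, not the fine an authority would actually set.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PenaltyTier:
    label: str
    fixed_cap_eur: int     # fixed maximum, in euros
    turnover_percent: int  # maximum as a percentage of worldwide annual turnover


# Figures from Article 99(3)-(5) of the AI Act; the dictionary keys are hypothetical.
TIERS = {
    "prohibited_practice": PenaltyTier("Prohibited AI practices", 35_000_000, 7),
    "other_obligation": PenaltyTier("Other obligations, incl. high-risk rules", 15_000_000, 3),
    "misleading_information": PenaltyTier("Incorrect or misleading information", 7_500_000, 1),
}


def max_fine(tier_key: str, worldwide_turnover_eur: int) -> float:
    """Statutory ceiling for a non-SME: the HIGHER of the fixed cap and the turnover share."""
    tier = TIERS[tier_key]
    turnover_based = worldwide_turnover_eur * tier.turnover_percent / 100
    return max(float(tier.fixed_cap_eur), turnover_based)


if __name__ == "__main__":
    # A company with EUR 2 billion turnover: 7% (EUR 140m) exceeds the EUR 35m cap.
    print(max_fine("prohibited_practice", 2_000_000_000))  # 140000000.0
    # A company with EUR 100 million turnover: the EUR 35m cap is higher than 7% (EUR 7m).
    print(max_fine("prohibited_practice", 100_000_000))    # 35000000.0
```

Because the percentage grows with turnover while the fixed cap does not, the percentage becomes the binding limit in the top tier once turnover exceeds €500 million (7% of €500 million is €35 million).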

In each tier, the higher of the two amounts applies (for SMEs, the lower). Your organization needs to understand these tiers to avoid serious financial risks.

The system targets specific problematic practices, including:

- Subliminal manipulation techniques;

- Exploitation of vulnerable populations;

- Unauthorized biometric identification systems;

- Social scoring mechanisms.

These penalties underline the EU’s commitment to protecting individual rights and to enforcing AI ethics in practice.

Organizations must have strong risk management strategies to meet these complex regulatory needs.

By August 2026, when most of the Act applies, member states are expected to have their national AI enforcement systems fully in place.

This makes proactive compliance a legal must and a strategic move for businesses in the European market.

Maximum Penalties and Financial Implications

The EU AI Act has a strict penalty system that could significantly affect your company’s finances.

Knowing these fines helps you keep your AI transparent and avoid major financial losses.

Penalties depend on the nature and severity of the breach.

If your AI systems do not meet the required standards, you could face substantial fines.

Calculation Methods for Fines

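EU authorities follow set criteria when deciding the amount of a fine.

They consider factors such as:

- The nature, gravity and duration of the infringement;

- Your company’s size, annual turnover and market share;

- Whether the infringement was intentional or negligent;

- The harm caused and the number of people affected;

- Your cooperation with authorities and any steps taken to mitigate the harm.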

Impact on Company Revenue

The money impact can be huge.

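For the most serious violations, those involving prohibited AI practices, fines can reach €35 million or 7% of your company’s worldwide annual turnover, whichever is higher.

These large fines show how important it is to audit your AI systems thoroughly and prepare for problems before they arise.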

Special Considerations for SMEs

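The EU AI Act makes allowances for small businesses.

For Small and Medium Enterprises (SMEs), including start-ups, each fine is capped at the lower of the fixed amount and the turnover percentage, rather than the higher.

This keeps the rules strict while taking into account what a small business can afford.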
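Article 99(6) reverses the comparison for SMEs, including start-ups: each fine is capped at the lower of the two amounts. The sketch below, a hypothetical helper rather than an official calculator, compares both rules for the same turnover. It takes SME status as an input and does not check the EU size criteria that define an SME.

```python
def fine_ceiling(fixed_cap_eur: int, turnover_percent: int,
                 worldwide_turnover_eur: int, is_sme: bool) -> float:
    """Upper limit of a fine under one penalty tier.

    is_sme is taken as an input; this sketch does not test SME status itself.
    """
    fixed = float(fixed_cap_eur)
    turnover_based = worldwide_turnover_eur * turnover_percent / 100
    if is_sme:
        # SMEs and start-ups: the LOWER of the two amounts is the ceiling.
        return min(fixed, turnover_based)
    # All other undertakings: the HIGHER of the two amounts is the ceiling.
    return max(fixed, turnover_based)


if __name__ == "__main__":
    # Prohibited-practice tier (EUR 35m or 7%) for a firm with EUR 20m turnover.
    print(fine_ceiling(35_000_000, 7, 20_000_000, is_sme=True))   # 1400000.0
    print(fine_ceiling(35_000_000, 7, 20_000_000, is_sme=False))  # 35000000.0
```

For the same breach and the same €20 million turnover, the ceiling drops from €35 million to €1.4 million once SME status applies.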

| Violation Type | Maximum Penalty |
|---|---|
| Prohibited AI Practices | €35 million or 7% of turnover |
| High-Risk AI System Non-Compliance | €15 million or 3% of turnover |
| Providing False Information | €7.5 million or 1% of turnover |

Being proactive about following the rules can help avoid these money problems.

It shows you care about using AI the right way.

Non-Compliance with AI Laws in the EU: A Detailed Look

The European Union’s rules on AI mark a big step towards responsible AI use.

It’s key for companies in the EU to understand these rules well.

The AI Act sets up a system to check AI systems based on their risks and how they affect society.

Some main reasons for not following EU AI laws include:

- Not doing thorough risk checks;

- Not being clear about how AI works;

- Ignoring rules for AI accountability;

- Not following ethical AI guidelines.

The rules vary based on the AI’s risk level.

High-risk AI systems have the toughest rules.

Companies need to be very careful to avoid big fines.

| Risk Category | Compliance Requirements | Potential Penalties |
|---|---|---|
| Unacceptable Risk | Complete Prohibition | Up to €35 million or 7% of global turnover |
| High-Risk Systems | Extensive Documentation | Up to €15 million or 3% of global turnover |
| Limited Risk | Transparency Obligations | Up to €15 million or 3% of global turnover |

Your company should focus on AI ethics and make strong plans for following the rules.

The EU AI Act calls for careful handling of AI, with particular focus on sensitive areas such as education, law enforcement and the administration of justice.

By some estimates, 60% of companies do not know which AI rules apply to them.

This lack of knowledge is a serious risk, given fines of up to €35 million or 7% of global turnover.

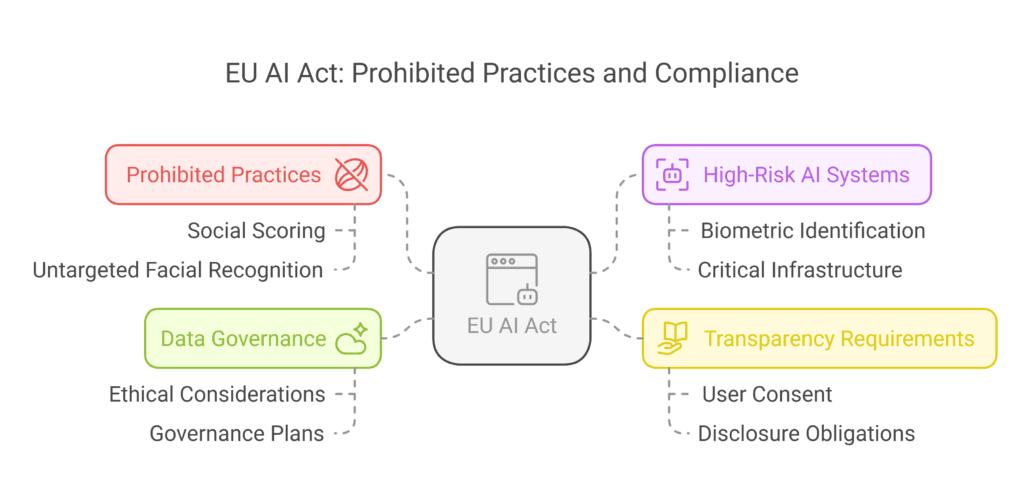

Prohibited AI Practices and Associated Penalties

The European Union’s AI Act sets strict rules for using AI.

It aims to protect human rights and ensure AI is used responsibly.

Knowing what’s banned is key for companies to stay compliant and avoid big fines.

The EU has set limits for AI systems that could harm people or values.

These rules focus on practices that might hurt users or go against ethics.

High-Risk AI Systems: A Closer Look

Companies need to check their AI systems carefully.

The rules highlight certain high-risk uses that need extra attention:

- Biometric identification systems;

- Critical infrastructure management;

- Employment and workforce screening;

- Educational assessment technologies;

- Access to essential public and private services.
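Before a detailed legal assessment, it can help to screen an inventory of AI systems against the categories above. This Python sketch is a simplified, hypothetical triage: the tag names are invented for illustration, and the Act’s real lists of prohibited practices (Article 5) and high-risk use cases (Annex III) are longer and include exceptions, so a result here is a prompt for legal review, not a classification.

```python
from enum import Enum


class Triage(Enum):
    PROHIBITED = "likely prohibited"
    HIGH_RISK = "likely high-risk"
    REVIEW = "needs further legal review"


# Hypothetical tags built from the practices and use areas named in this article.
# The Act's own lists (Article 5 and Annex III) are longer and contain exceptions.
PROHIBITED_TAGS = {
    "social_scoring",
    "subliminal_manipulation",
    "exploiting_vulnerable_groups",
    "untargeted_facial_scraping",
}
HIGH_RISK_TAGS = {
    "biometric_identification",
    "critical_infrastructure",
    "employment_screening",
    "educational_assessment",
    "essential_services_access",
}


def triage(system_tags: set[str]) -> Triage:
    """First-pass screening of one AI system, checking the strictest category first."""
    if system_tags & PROHIBITED_TAGS:
        return Triage.PROHIBITED
    if system_tags & HIGH_RISK_TAGS:
        return Triage.HIGH_RISK
    return Triage.REVIEW


if __name__ == "__main__":
    print(triage({"employment_screening", "cv_parsing"}))  # Triage.HIGH_RISK
```

Checking prohibited tags first means a system that touches both lists is flagged under the stricter category.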

Transparency Violations and Consequences

Being open about AI use is critical.

Companies must tell people when they’re dealing with AI.

This ensures people know what is happening and can make informed decisions.
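For a chat interface, one simple way to meet the duty to inform people is to attach a notice to the first reply of each session. The sketch below assumes a hypothetical `ChatSession` wrapper around whatever model produces the answers; the disclosure wording is also an assumption, since the transparency rules (Article 50) require that people be informed rather than prescribing a sentence.

```python
from dataclasses import dataclass, field

# Hypothetical wording: the Act requires that people be informed, not a fixed sentence.
AI_DISCLOSURE = "Please note: you are chatting with an AI system, not a human."


@dataclass
class ChatSession:
    disclosed: bool = False
    transcript: list[str] = field(default_factory=list)

    def reply(self, model_output: str) -> str:
        """Return the message shown to the user, adding the disclosure to the first reply."""
        if self.disclosed:
            message = model_output
        else:
            message = f"{AI_DISCLOSURE}\n\n{model_output}"
            self.disclosed = True
        self.transcript.append(message)  # keep a record of what the user actually saw
        return message


if __name__ == "__main__":
    session = ChatSession()
    print(session.reply("Hello! How can I help you today?"))
    print(session.reply("Your order shipped yesterday."))
```

Storing the transcript as shown to the user also gives you evidence that the disclosure was made.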

| Violation Type | Maximum Penalty |
|---|---|
| Prohibited AI Practices | €35,000,000 or 7% global turnover |
| Other Obligations (incl. Transparency Duties) | €15,000,000 or 3% global turnover |
| Incorrect or Misleading Information to Authorities | €7,500,000 or 1% global turnover |

Data Governance and Ethical Considerations

AI must be developed with ethics in mind.

Certain activities are banned, including:

- Social scoring systems;

- Untargeted scraping of facial images to build facial recognition databases;

- Emotional manipulation;

- Exploitative AI targeting vulnerable populations.

Your company needs strong AI governance plans to meet these complex rules and avoid legal trouble.

Impact on Business Operations and Compliance Costs

Dealing with AI legal risks is a big challenge for European businesses.

The EU AI Act brings new rules that affect how you run your business and your budget.

Small businesses in particular may find it hard to meet the new AI rules.

EU studies estimate that bringing a single high-risk AI product into compliance could cost up to €400,000.

These costs cover many areas:

- Quality management system implementation;

- Risk assessment documentation;

- Transparency reporting;

- Ongoing compliance monitoring.

Breaking AI rules in Europe can be very costly.

Fines can reach €35 million or 7% of global turnover, whichever is higher.

Breaches of AI ethics also have serious consequences beyond fines, including damage to reputation and customer trust.

Companies need to check their AI systems carefully.

They should:

- Do thorough risk assessments;

- Keep detailed records;

- Have clear AI rules;

- Keep checking for compliance.

Even though following these rules costs money, it can help you stay ahead.

Companies that follow these rules will earn more trust from customers.

They will also show that they act responsibly as European rules continue to evolve.

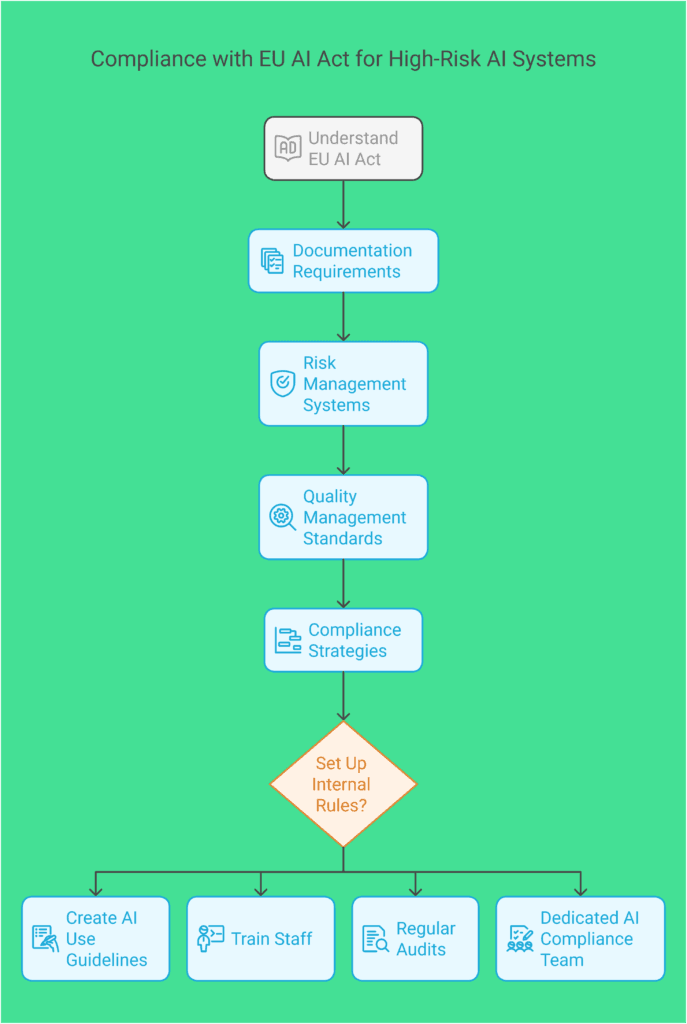

Requirements for High-Risk AI Systems and Transparency Obligations

The EU AI Act sets clear rules for managing high-risk AI systems.

It focuses on AI accountability and governance across the EU.

These rules aim to stop unethical AI use by requiring clear transparency and documentation.

Understanding AI regulations is key for companies working with high-risk AI systems.

It’s important to know the main rules to follow.

Documentation Requirements

Your company must keep detailed records of AI system development.

These records should cover the whole life cycle of the AI.

They should include:

- Comprehensive system description;

- Development methodology;

- Training data verification;

- Performance metrics;

- Risk assessment records.
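Keeping these records in a structured form makes gaps easy to spot. The following Python sketch uses a hypothetical `TechnicalDocumentation` class whose fields mirror the list above; it is an internal tracking aid, not the official content required by Annex IV of the Act.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class TechnicalDocumentation:
    """Hypothetical internal record for one high-risk AI system.

    The fields mirror the items listed above; this is not the Act's official template.
    """
    system_description: Optional[str] = None
    development_methodology: Optional[str] = None
    training_data_verification: Optional[str] = None
    performance_metrics: Optional[dict[str, float]] = None
    risk_assessment_records: Optional[list[str]] = None

    def missing_items(self) -> list[str]:
        """Names of items that are still empty (None, "", {} or [])."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


if __name__ == "__main__":
    doc = TechnicalDocumentation(
        system_description="CV screening model for recruitment",
        performance_metrics={"accuracy": 0.91},
    )
    # ['development_methodology', 'training_data_verification', 'risk_assessment_records']
    print(doc.missing_items())
```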

Risk Management Systems

A strong risk management system is vital for AI accountability.

Your system should identify, evaluate, and mitigate risks throughout the AI system’s life cycle.

| Risk Management Component | Key Requirements |
|---|---|
| Identification | Comprehensive risk assessment |
| Evaluation | Quantitative and qualitative risk analysis |
| Mitigation | Proactive risk reduction strategies |
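The identify, evaluate and mitigate cycle in the table can be tracked with a simple risk register. This sketch assumes a 1 to 5 severity and likelihood scale and a review threshold of 10; both are common internal conventions chosen for illustration, not values set by the AI Act, and the example risks are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    severity: int         # hypothetical scale: 1 (negligible) to 5 (critical)
    likelihood: int       # hypothetical scale: 1 (rare) to 5 (almost certain)
    mitigation: str = ""  # empty until a mitigation measure is recorded

    def __post_init__(self) -> None:
        for name in ("severity", "likelihood"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Evaluation step: a simple severity x likelihood product.
        return self.severity * self.likelihood


def open_high_risks(register: list[Risk], threshold: int = 10) -> list[Risk]:
    """Risks at or above the threshold that still lack a mitigation, highest score first."""
    pending = [r for r in register if r.score >= threshold and not r.mitigation]
    return sorted(pending, key=lambda r: r.score, reverse=True)


if __name__ == "__main__":
    register = [  # identification step: risks recorded for one system
        Risk("Ranking bias against older applicants", severity=4, likelihood=3),
        Risk("Accuracy drift after a data update", severity=3, likelihood=2),
        Risk("Recruiters over-rely on scores", severity=3, likelihood=4,
             mitigation="Mandatory human review of rejections"),
    ]
    for risk in open_high_risks(register):
        print(risk.score, risk.description)  # 12 Ranking bias against older applicants
```

A risk drops off the action list only once a mitigation is recorded, which keeps the mitigation step visible in the register itself.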

Quality Management Standards

The EU AI Act requires strict quality standards for high-risk AI systems.

Your quality system must keep checking, validating, and improving AI tech.

Following these rules shows your dedication to ethical AI development.

It also protects your company from fines under the EU AI Act.

Compliance Strategies and Risk Mitigation

Understanding AI legal frameworks is complex.

Your company needs to act early to meet the EU AI Act’s standards by 2026.

This is key to avoiding legal issues.

Effective AI risk management strategies include:

- Conduct thorough risk assessments for all AI systems;

- Implement robust AI transparency protocols;

- Develop detailed records of AI development processes;

- Set up ongoing checks and evaluations.
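Ongoing checks are easier to sustain with a schedule. The sketch below flags AI systems whose last compliance review is older than a fixed interval; the 180-day interval and the system names are hypothetical policy choices, not requirements of the Act.

```python
from datetime import date, timedelta

# Hypothetical internal policy: the Act requires ongoing monitoring, not this exact interval.
REVIEW_INTERVAL = timedelta(days=180)


def overdue_reviews(last_reviewed: dict[str, date], today: date,
                    interval: timedelta = REVIEW_INTERVAL) -> list[str]:
    """Names of AI systems whose last compliance review is older than the interval."""
    return sorted(
        name for name, reviewed_on in last_reviewed.items()
        if today - reviewed_on > interval
    )


if __name__ == "__main__":
    inventory = {
        "cv-screening": date(2025, 1, 10),
        "support-chatbot": date(2025, 5, 2),
    }
    print(overdue_reviews(inventory, today=date(2025, 8, 1)))  # ['cv-screening']
```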

AI oversight needs a detailed plan.

You must set up internal rules that follow the EU AI Act.

This means:

- Creating clear AI use guidelines;

- Training staff on legal rules;

- Carrying out regular audits;

- Having a dedicated AI compliance team.

There are big financial risks.

Fines can be up to €35 million or 7% of global sales.

Small businesses should also note the special penalty rules that apply to SMEs.

For expert advice on these rules, reach out to our Romanian Law Office.

Economic Impact of AI Regulation in the European Union

The European Union’s approach to AI governance is changing the digital world.

It has big economic effects.

Your business needs to understand how the EU AI Act works.

This Act sets strict rules for AI.

It aims to make AI trustworthy while keeping innovation alive.

It’s all about finding a balance.

Experts expect significant economic hurdles for EU businesses.

Some estimates put the cost of complying with the Act at up to €36 billion.

Small businesses may struggle the most, as they need to invest in complex risk management.

The impact goes beyond just the cost.

The EU is becoming a leader in responsible tech.

Your company can stand out by using ethical AI.

This could give you an edge in markets that value transparency.

Adapting to these new rules is key.

Companies that plan well for AI compliance will do better.

The rules push for tech that’s more responsible and focused on people.

FAQ

What are the key financial risks of non-compliance with the EU AI Act?

Not following the EU AI Act can lead to big fines.

For the most serious violations, fines can reach €35 million or 7% of your worldwide annual turnover, whichever is higher.

These fines are meant to make companies follow AI rules in Europe.

How does the EU AI Act categorize AI systems for regulatory purposes?

The Act sorts AI systems by risk level.

High-risk systems, such as those used in critical infrastructure, employment or education, face strict rules.

The level of risk decides the rules and fines for each AI system.

What constitutes a transparency violation under the EU AI Act?

Failing to tell people that they are interacting with an AI system is a violation.

It is also a breach to hide how an AI system works or what it can do.

These breaches can lead to fines of up to €15 million or 3% of global turnover and reflect the EU’s focus on AI fairness.

How will the EU AI Act impact small and medium-sized enterprises (SMEs)?

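SMEs, including start-ups, receive special treatment under the Act.

They benefit from priority access to AI regulatory sandboxes, a simplified form of technical documentation, reduced conformity assessment fees, and fines capped at the lower of the fixed amount and the turnover percentage.

However, they must still make sure their AI systems meet the Act’s requirements.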

What are the primary prohibited AI practices under the regulation?

The Act bans AI that’s too risky.

This includes real-time remote biometric identification in public spaces for law enforcement (with narrow exceptions), social scoring, and manipulative techniques.

Breaking these rules can lead to the biggest fines.

How can businesses prepare for compliance with the EU AI Act?

To get ready, take an inventory of your AI systems and assess their risk levels, set up sound risk management, and be transparent about AI development.

Also, train staff on AI ethics and keep an eye on compliance.

Getting legal advice can also help a lot.

What are the key documentation requirements for high-risk AI systems?

High-risk AI systems need lots of records.

This includes risk checks, how well the AI works, and data used to train it.

These records help keep AI use honest and open.

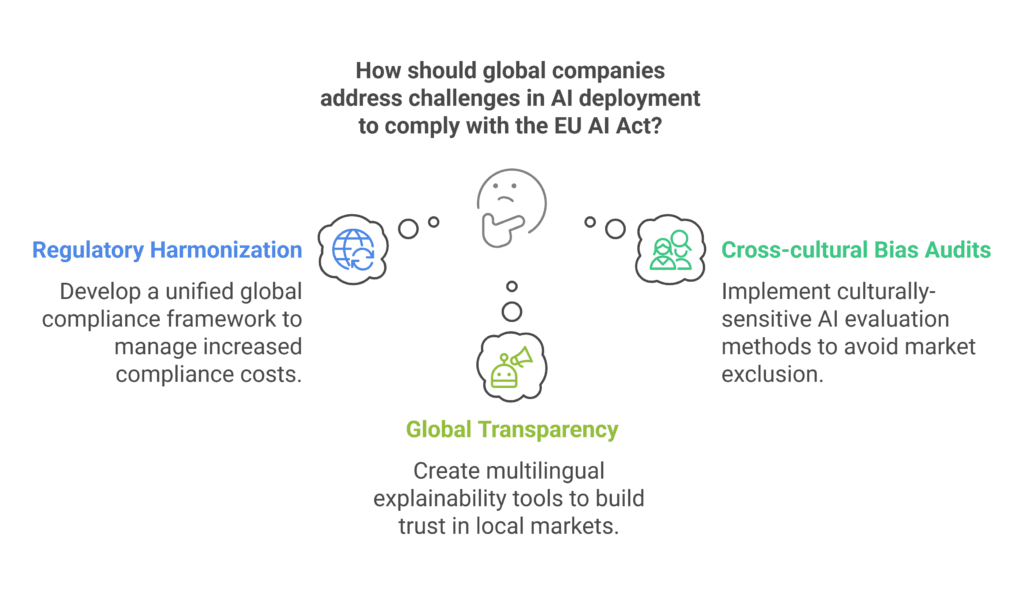

How does the EU AI Act compare to other global AI regulations?

The EU AI Act is widely seen as the world’s first comprehensive, cross-sector AI law.

It’s known for its focus on risk, ethics, and big fines for breaking the rules.

It might set a standard for AI rules around the world.

What are the possible long-term economic benefits of these regulations?

At first, following these rules might cost a lot.

But, they aim to make AI trustworthy.

This could give European companies an edge.

The EU wants to encourage innovation and trust in AI.

How will penalties be calculated under the EU AI Act?

Fines will depend on how serious the mistake is.

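Each fine is capped at either a fixed amount or a percentage of worldwide annual turnover, whichever is higher (whichever is lower for SMEs).

The exact fine depends on factors such as the severity of the breach, whether it was intentional, and how much harm it caused.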

What is the EU AI Act?

The EU AI Act is a comprehensive regulatory framework designed by the European Union to govern the use and development of artificial intelligence within its member states.

This act categorizes AI systems into different risk levels, ensuring that high-risk AI systems are subject to strict compliance measures.

The AI Act aims to promote innovation while safeguarding fundamental rights and societal values, reflecting the EU’s commitment to ethical AI governance.

What are high-risk AI systems?

High-risk AI systems are defined under the EU AI Act as those that can significantly impact people’s lives, such as systems used in critical infrastructure, education, employment, law enforcement, and biometric identification.

These systems must comply with rigorous standards of transparency, accountability, and ethical considerations to mitigate potential risks to fundamental rights and ensure public safety.

What are the penalties for non-compliance with the EU AI Act?

The EU AI Act outlines significant penalties for non-compliance, which can include fines based on the annual turnover of the offending organization.

For the most serious violations, penalties can reach €35 million or 7% of worldwide annual turnover, whichever is higher.

This stringent approach underscores the importance of adhering to the regulations set forth to promote safe and responsible AI practices.

What is considered non-compliance with the prohibition?

Non-compliance with the prohibition refers to failing to respect the bans the EU AI Act places on prohibited AI practices.

Examples include using social scoring systems or building facial recognition databases through untargeted scraping of facial images.

Organizations found in violation may face severe penalties, emphasizing the need for strict compliance with the regulatory framework.

What types of AI practices are prohibited under the EU AI Act?

The EU AI Act identifies several prohibited AI practices that pose a threat to fundamental rights and public safety.

These include systems that manipulate human behavior, exploit the vulnerabilities of specific groups, or carry out social scoring.
